
By Karen Riccio

It’s no surprise that AI dominated January’s Consumer Electronics Show (CES) held in Las Vegas.

Many of the 311 exhibitors, mostly startups with a sprinkling of established companies, displayed innovative AI applications and gadgets destined to change how we all live.

Highlights included a phone app that can translate your baby’s cries and tell you whether they’re hungry, tired or in need of a new diaper; ChatGPT built into Volkswagen cars, so you can ask your car to find a good Mexican restaurant nearby; and even a grill that uses AI to barbecue the perfect steak.

Technologies like these certainly deserve “Coolest Tech” awards, and I’m sure they grabbed the lion’s share of attention from the event’s 130,000 attendees.

However, the data center tools displayed by a few companies get the “Most Necessary” award from yours truly.

I don’t think I’m alone by a long shot.

I’ve said this before, and I’ll say it again: as has happened with new technologies in the past, we’ve gotten ahead of ourselves.

AI currently faces a shortage of GPUs (graphics processing units), the powerful chips needed to run the complex, data-intensive models now being developed and deployed.

That’s why the companies that make these chips captured the most attention and investment in 2023.

The Underbelly of AI Has a Problem

But another problem is lurking that hasn’t made headlines or raised red flags, at least not yet. That’s because most people know little about data centers or the role they play in just about everything.

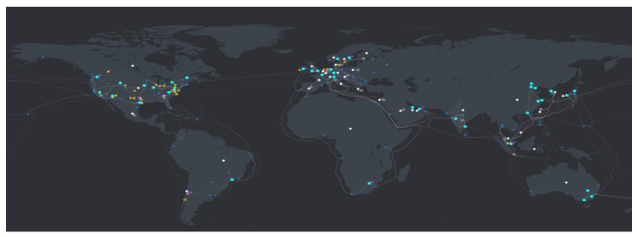

You see, the heavy computing AI requires takes place in data centers, where processors, memory and storage devices are packed into server racks, sometimes by the hundreds of thousands. These facilities are designed to provide a secure, reliable environment for running computer equipment.

They range in size from small cabinets to massive “hyperscale” warehouses containing hundreds of thousands of devices. Others, known as campuses, group multiple buildings together and span acres.

As you can well imagine, data centers already have a bad reputation as energy hogs. And AI only exacerbates the problem.

AI processors don’t just suck up a lot of energy; they also give off an enormous amount of heat.

To keep GPUs from overheating, which can throttle their performance or permanently damage them, data centers are typically kept between 64.4 and 71.6 degrees Fahrenheit (18 to 22 degrees Celsius) 24 hours a day, seven days a week. Letting temperatures drift outside that range risks hardware failures and outages. That’s a piece of cake if your data center sits somewhere the temperature never strays from that range.

But data centers are in every corner of the globe and subject to a variety of climates.

To keep them cool, especially on hot days, many data centers rely on water, often pumped to cooling towers outside these warehouse-sized buildings. Securing enough water has become increasingly challenging, too.

Microsoft (MSFT), which operates 200 data centers around the globe, recently disclosed that its global water consumption jumped 34% from 2021 to 2022, to nearly 1.7 billion gallons (more than 2,500 Olympic-sized swimming pools). That’s a much sharper increase than in previous years, and outside researchers tie it to the company’s AI research.

Efficiency and sustainability are also part of the cooling equation. Moving as much data as possible off GPUs and into storage systems, either onsite or offsite, can reduce the amount of power a data center needs.

So, the IT folks who run data centers have different options to achieve the same goal: to keep all their computers online for every second of every day.

Driven by the growth of AI, the internet of things (IoT), machine learning and more, data centers are on track to require an estimated 848 terawatt-hours (TWh) of electricity a year by 2030. Up to 40% of that could go toward cooling alone.

To put that in perspective, 1 terawatt-hour is enough to cool 500,000 homes for a full year, light more than 1 million homes for a year or fully power 70,000 homes for a year.
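To make that scale concrete, here is a back-of-the-envelope sketch in Python that combines only the figures quoted above: 848 TWh by 2030, up to 40% of it for cooling, and the rule of thumb that 1 TWh fully powers about 70,000 homes for a year. It assumes the 848 TWh is an annual figure, and it takes the article’s estimates at face value rather than verifying them.

```python
# Back-of-the-envelope arithmetic using only the figures quoted in this article.
# Assumption: the 848 TWh projection is annual consumption; inputs are not independently verified.

TOTAL_TWH_2030 = 848            # projected data center demand by 2030, in terawatt-hours
COOLING_SHARE_MAX = 0.40        # "up to 40%" of that demand goes to cooling
HOMES_POWERED_PER_TWH = 70_000  # homes fully powered for a year by 1 terawatt-hour

# Upper bound on the energy spent on cooling alone.
cooling_twh = TOTAL_TWH_2030 * COOLING_SHARE_MAX

# Express that cooling load as "homes fully powered for a year".
homes_equivalent = cooling_twh * HOMES_POWERED_PER_TWH

print(f"Cooling, upper bound: {cooling_twh:.1f} TWh")
print(f"Roughly {homes_equivalent / 1e6:.1f} million homes fully powered for a year")
```

With these inputs, cooling alone could account for about 339 TWh, roughly what 23.7 million homes would use in a year by the article’s rule of thumb. The result is only as reliable as the estimates it starts from.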

To handle all that heat, data centers will need more fans, air conditioners, cooling systems or other solutions to prevent overheating and damage to their hardware.

Potential Solutions & How to Play Them

There’s no shortage of solutions, or of companies that provide them, ranging from liquid-based to air-based techniques. Lesser-known strategies, such as building data centers underwater, underground or even in abandoned mines, are also in use today.

The idea of space-based data centers is also being bandied about. Maybe Tesla (TSLA) CEO Elon Musk will play a role someday.

And there are plenty of data centers in need of updated or innovative solutions.

Although the total number of data centers is falling, from 8.6 million in 2015 to 7.2 million in 2022, the number of hyperscale facilities is growing rapidly. At the end of 2022, 700 of these giant data centers were in operation, and an average of 16 more are coming online every quarter.

Here are two key data center players that might allow you to tap into this trend. The data center cooling market alone is expected to grow from $12.7 billion in 2023 to $29.6 billion in 2030.

Ohio-based Vertiv Holdings (VRT) is one of the largest providers of liquid cooling technology, the fastest-growing cooling solution for data centers. With a market value of about $20.5 billion, the company is well positioned to tap into a market expected to grow 24% annually over the next five years.
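The growth figures in this section can be sanity-checked with standard compound-growth arithmetic. The sketch below computes the annual rate implied by the $12.7 billion (2023) to $29.6 billion (2030) cooling-market projection, and how much a market grows if it compounds at 24% a year for five years. The function names are illustrative, and the article doesn’t say whether both figures describe the same market.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


def growth_multiple(rate: float, years: int) -> float:
    """How many times larger a quantity becomes after compounding `rate` for `years` years."""
    return (1 + rate) ** years


# Cooling-market projection quoted above: $12.7B in 2023 -> $29.6B in 2030 (7 years).
cooling_cagr = implied_cagr(12.7, 29.6, 2030 - 2023)

# The 24%-a-year growth quoted for Vertiv's market, compounded over five years.
vertiv_multiple = growth_multiple(0.24, 5)

print(f"Implied cooling-market growth: {cooling_cagr:.1%} a year")
print(f"24% a year for 5 years: {vertiv_multiple:.2f}x the starting size")
```

The overall cooling projection works out to roughly 12.8% growth a year, while 24% annual growth nearly triples a market in five years, so the figure cited for Vertiv describes much faster growth than the overall projection.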

Vertiv boasts a strong balance sheet and is profitable. It has also beaten earnings estimates for three consecutive quarters. VRT will report its fourth-quarter 2023 results on Feb. 20. That’s one to watch.

My other pick in this arena is Pure Storage (PSTG), a $13 billion company based in California. Unlike Vertiv, which focuses on cooling, Pure Storage provides data centers with digital storage solutions.

Storage is particularly important because many techies consider it the biggest bottleneck to getting full utilization out of their GPUs.

The company has worked with some of the largest AI companies in the world, including Meta Platforms (META), where it supplies storage for Meta’s AI Research SuperCluster, one of the largest AI supercomputers in the world.

Whether these two go on to become pivotal players in this key undervalued aspect of AI is anyone’s guess. But someone needs to step up and offer solutions for something that will be a vital part of our lives moving forward.

Until next time,

Karen Riccio

P.S. Data storage and cooling are major challenges for the likes of Nvidia (NVDA). But they aren’t the only ones. In fact, your AI startup specialist Chris Graebe just put together a presentation that comes to a startling conclusion: Nvidia could crash on Feb. 28.